Our product seamlessly integrates responsible AI into your organization. It identifies where you stand and what must be done to avoid risks and penalties and to comply with EU regulations when using AI as part of your business.

Do you want to know more about anch.AI? Fill in the form below and we will get back to you shortly.

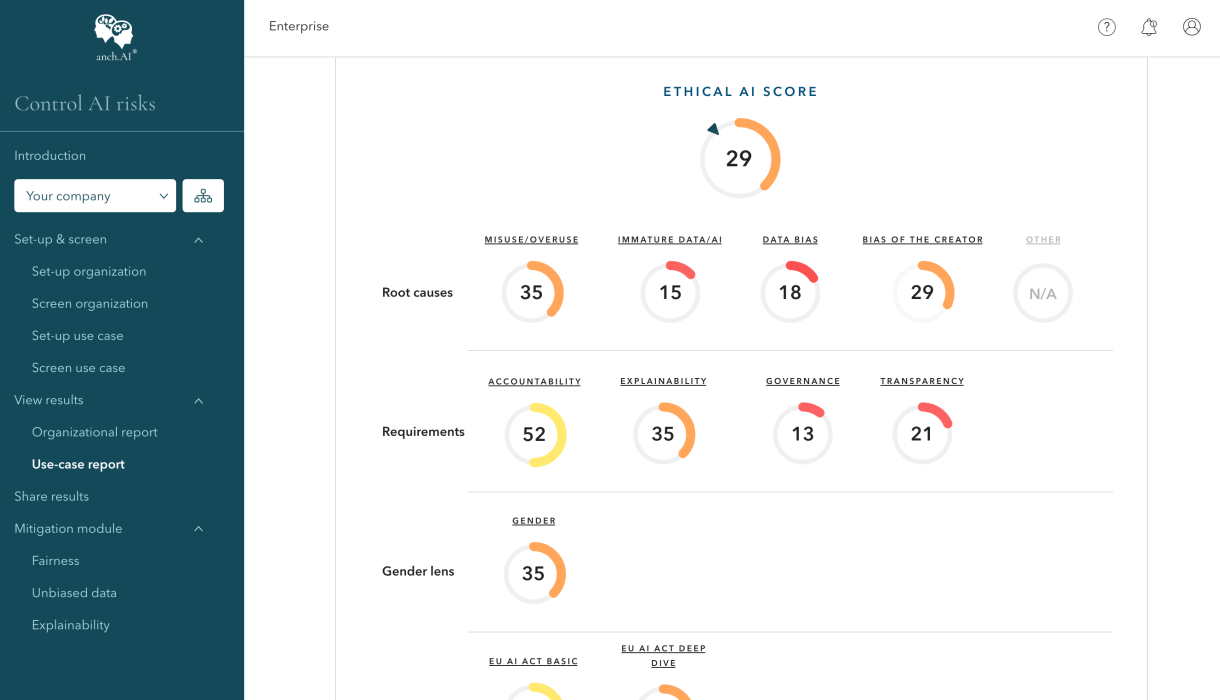

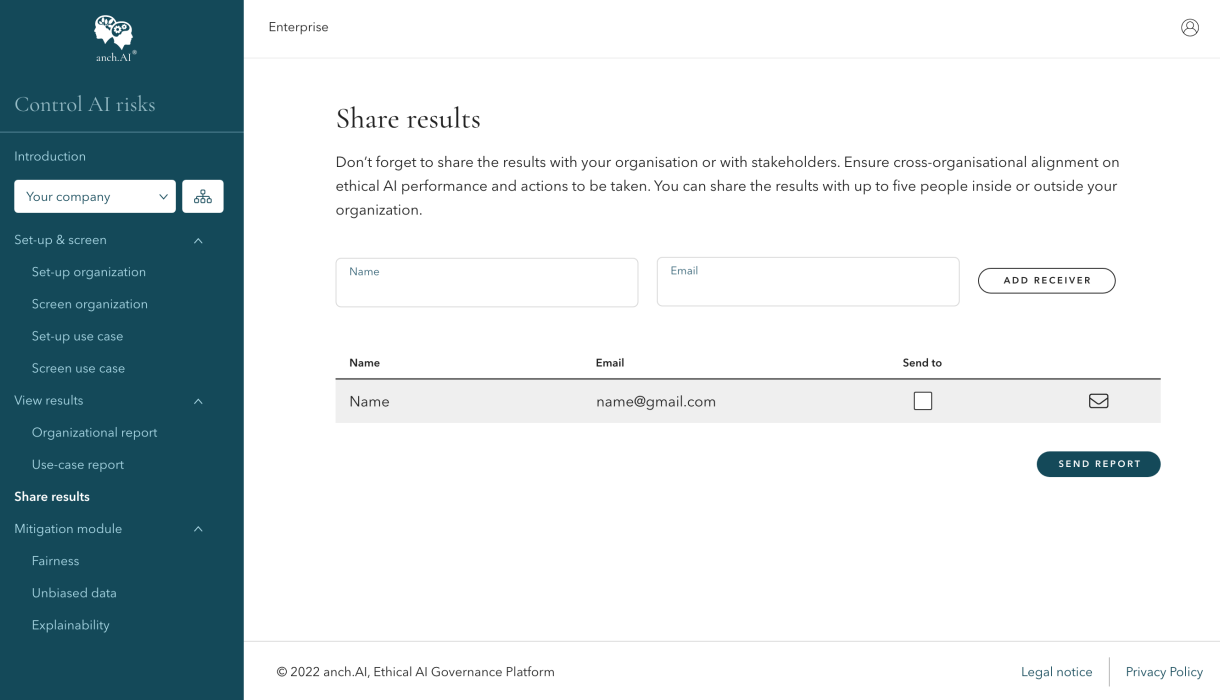

Self-assessment tool with dashboards presenting your ethical AI profile, including root causes, accountable functions/roles, and vulnerabilities with respect to responsible AI requirements.

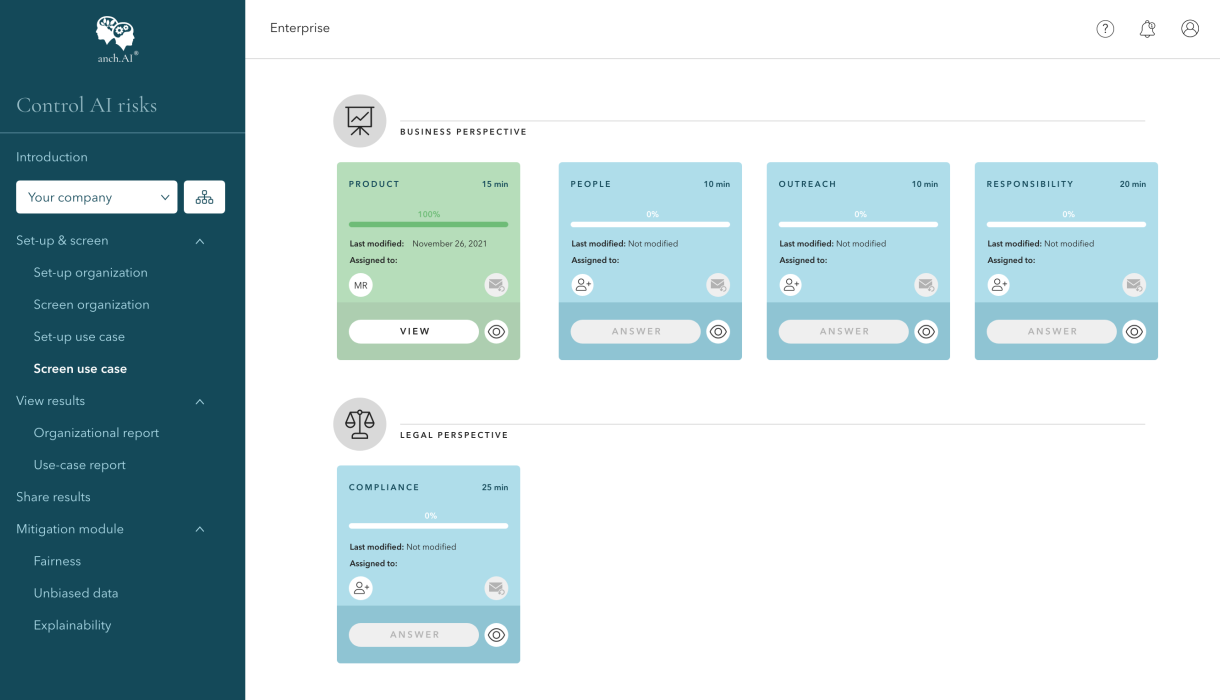

Screen a specific AI solution for ethical and legal pitfalls, and/or screen your entire organization for responsible AI maturity.

Choose additional deep-dive lenses if needed:

• Gender equality

• Upcoming EU regulation on AI

• Generative AI

Our AI prediction and recommendation tools assess which ethical and legal AI risks you are exposed to. Our gap analysis feature detects and measures potential deviations from your organizational values and guidelines in your AI solutions.

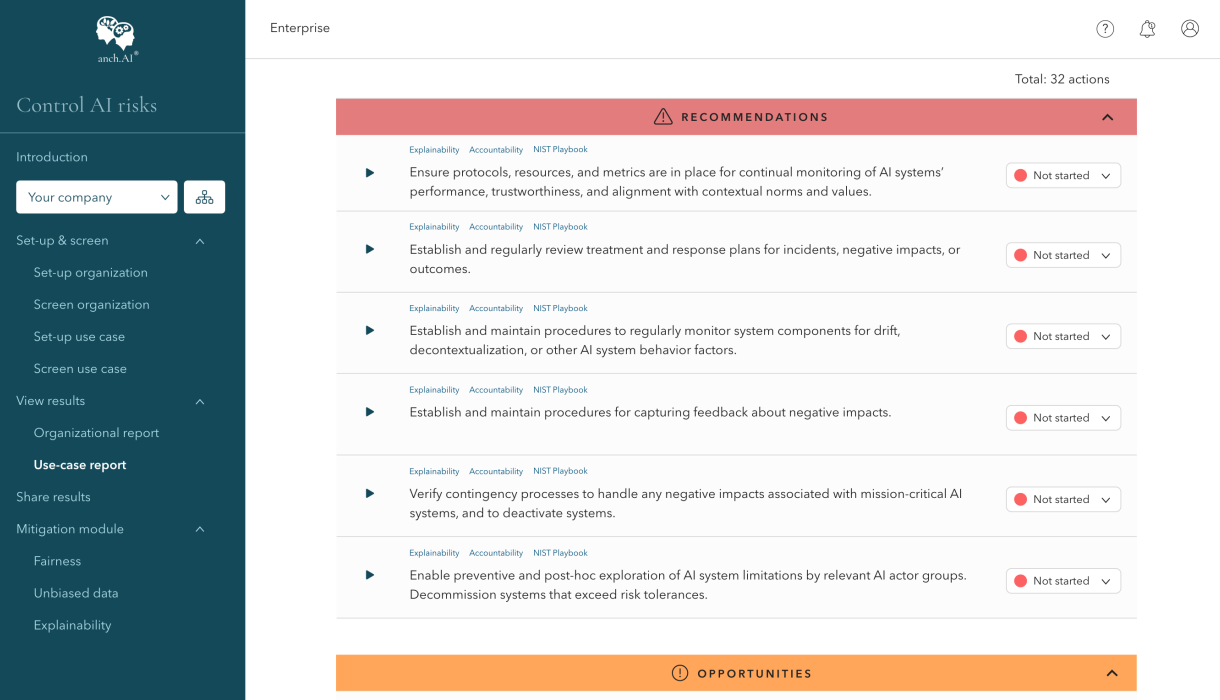

Get tailored recommendations and leverage mitigation tools based on your specific risk exposure. Choose from a variety of available tools, each designed for specific risks.

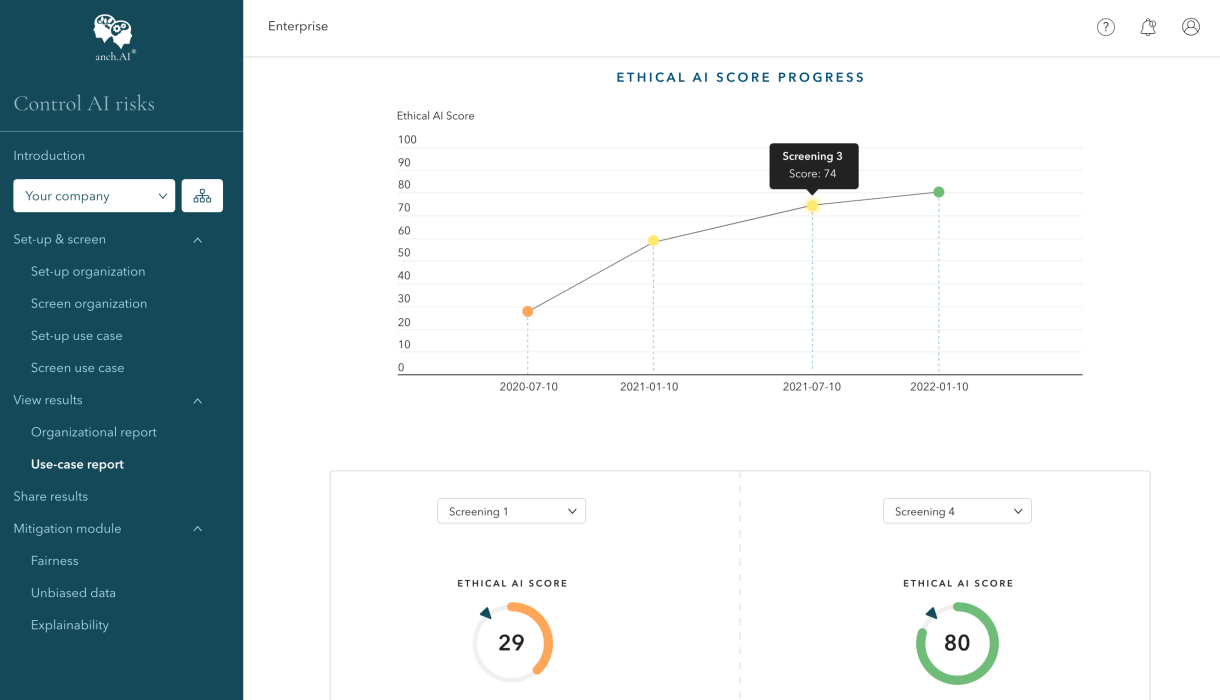

Re-screen your AI solution and/or your organization to validate your progress in reducing risk. Continuously verify your organization's responsible AI performance, and get a maturity benchmark for internal and external use.

Our reporting features plug easily into your GRC platform or internal governance structures. For example:

© 2023 anch.AI AB, all rights reserved